This project was completed in collaboration with Corinna Hirt and Kalle Reiter at the HfG Schwäbisch Gmünd.

The aim of the course (Invention Design,

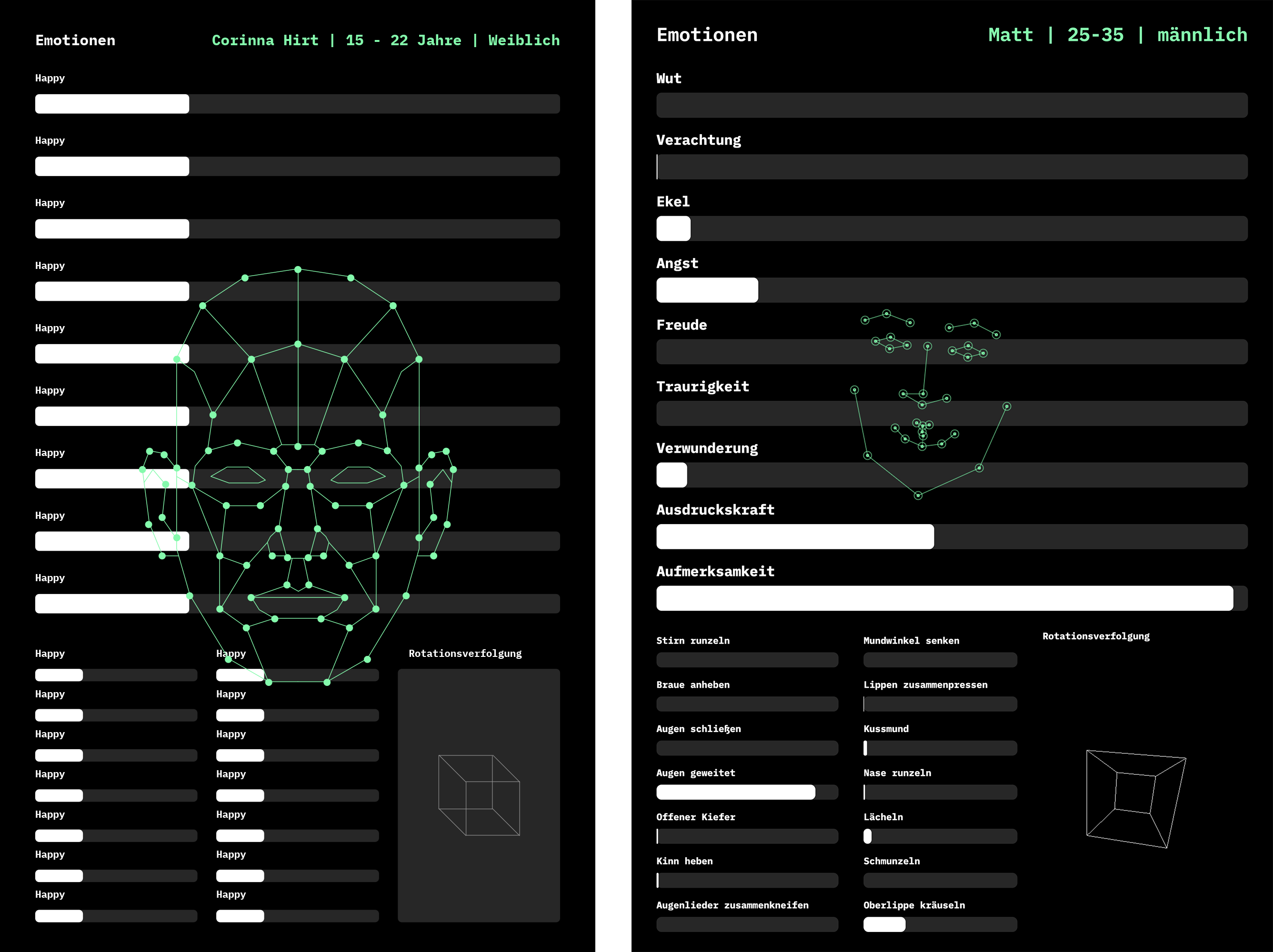

Prof. Jörg Beck) is to research future-focused technologies and design for interactions that may arise when these technologies mature. An emphasis was placed on anticipating the societal impact of these technologies and accounting for potential pitfalls in our designs. For this project, Kalle, Corinna, and I decided to research artifical intelligence and machine learning technologies.

Our project converged around the concept of adaptive user interfaces.

An Adaptive User Interface (AUI) is a user interface that dynamically adjusts its layout, elements, functionality, and/or content to a given user's needs, capabilities, and context of use.
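To make the definition concrete, here is a minimal, hypothetical sketch of the simplest form of adaptation: a fixed set of rules that maps a user's needs, capabilities, and context of use to interface settings. The `UserContext` and `UIConfig` fields and the thresholds are illustrative assumptions, not part of our project's design.

```python
from dataclasses import dataclass


@dataclass
class UserContext:
    """Hypothetical signals an adaptive UI might observe about its user."""
    vision_impaired: bool = False   # capability
    expertise: str = "novice"       # need: "novice" or "expert"
    on_mobile: bool = False         # context of use


@dataclass
class UIConfig:
    """Hypothetical interface settings the UI can adjust at runtime."""
    font_size_px: int
    layout: str
    show_advanced_tools: bool


def adapt_ui(ctx: UserContext) -> UIConfig:
    """Rule-based adaptation: derive UI settings from the user's context."""
    # Capabilities: enlarge text for users with low vision.
    font_size = 22 if ctx.vision_impaired else 16
    # Context of use: collapse to a single column on small screens.
    layout = "single-column" if ctx.on_mobile else "multi-column"
    # Needs: expose advanced functionality only to experienced users.
    show_advanced = ctx.expertise == "expert"
    return UIConfig(font_size, layout, show_advanced)


print(adapt_ui(UserContext(vision_impaired=True, on_mobile=True)))
```

Here the mapping is hand-written; an AUI built on machine learning would instead infer these adjustments from observed interaction data, which is where the societal risks discussed above come into play.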